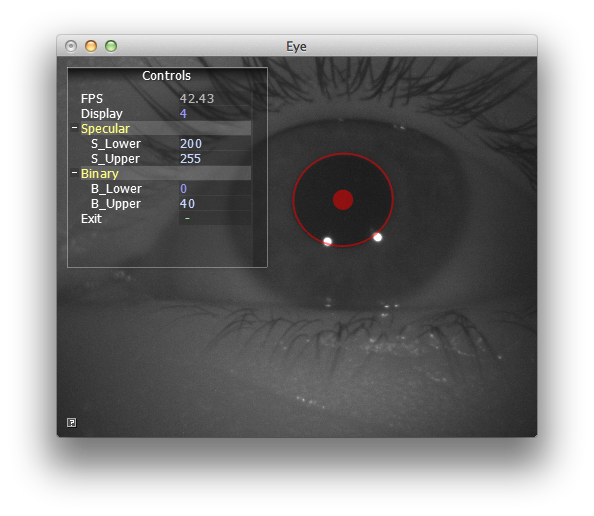

Exploration with pupil tracker

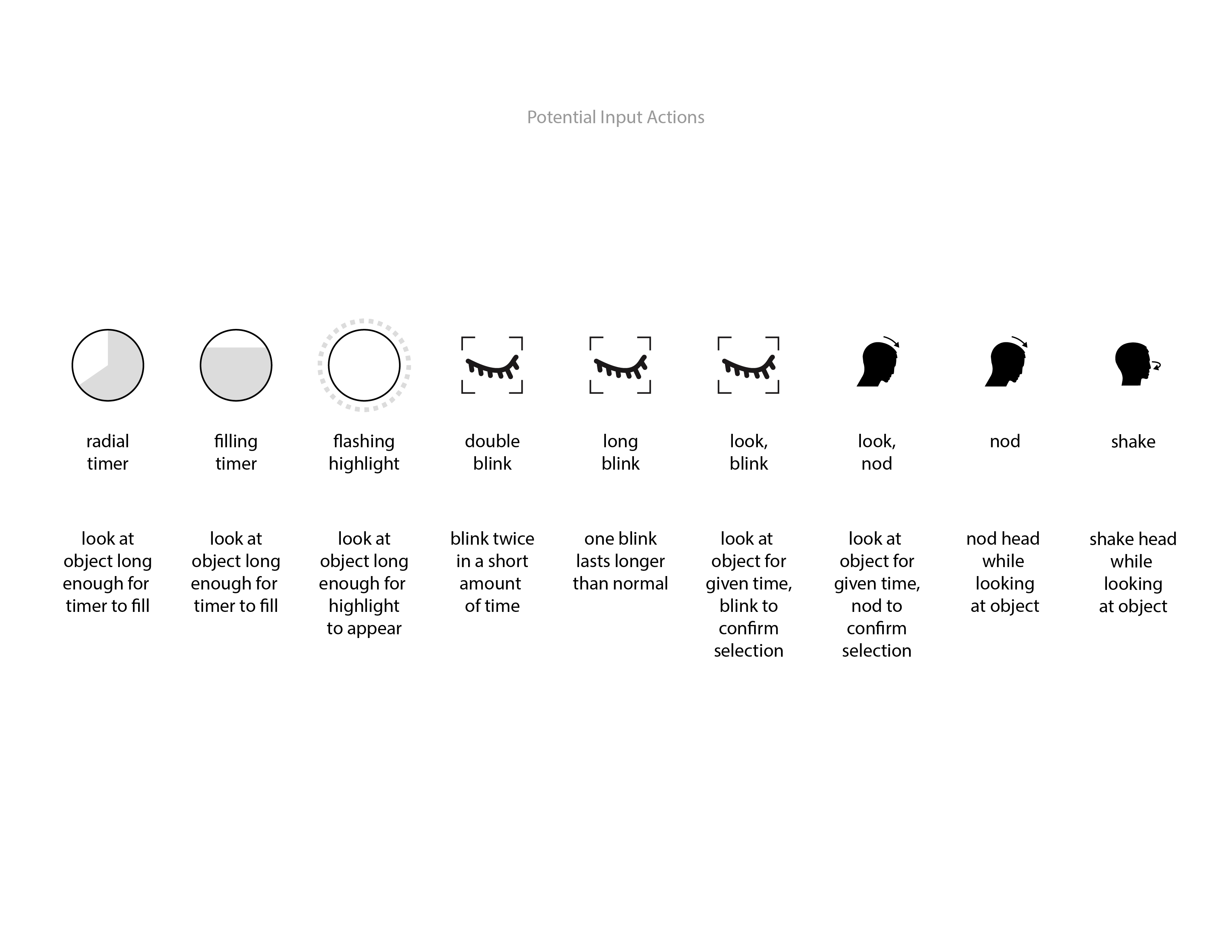

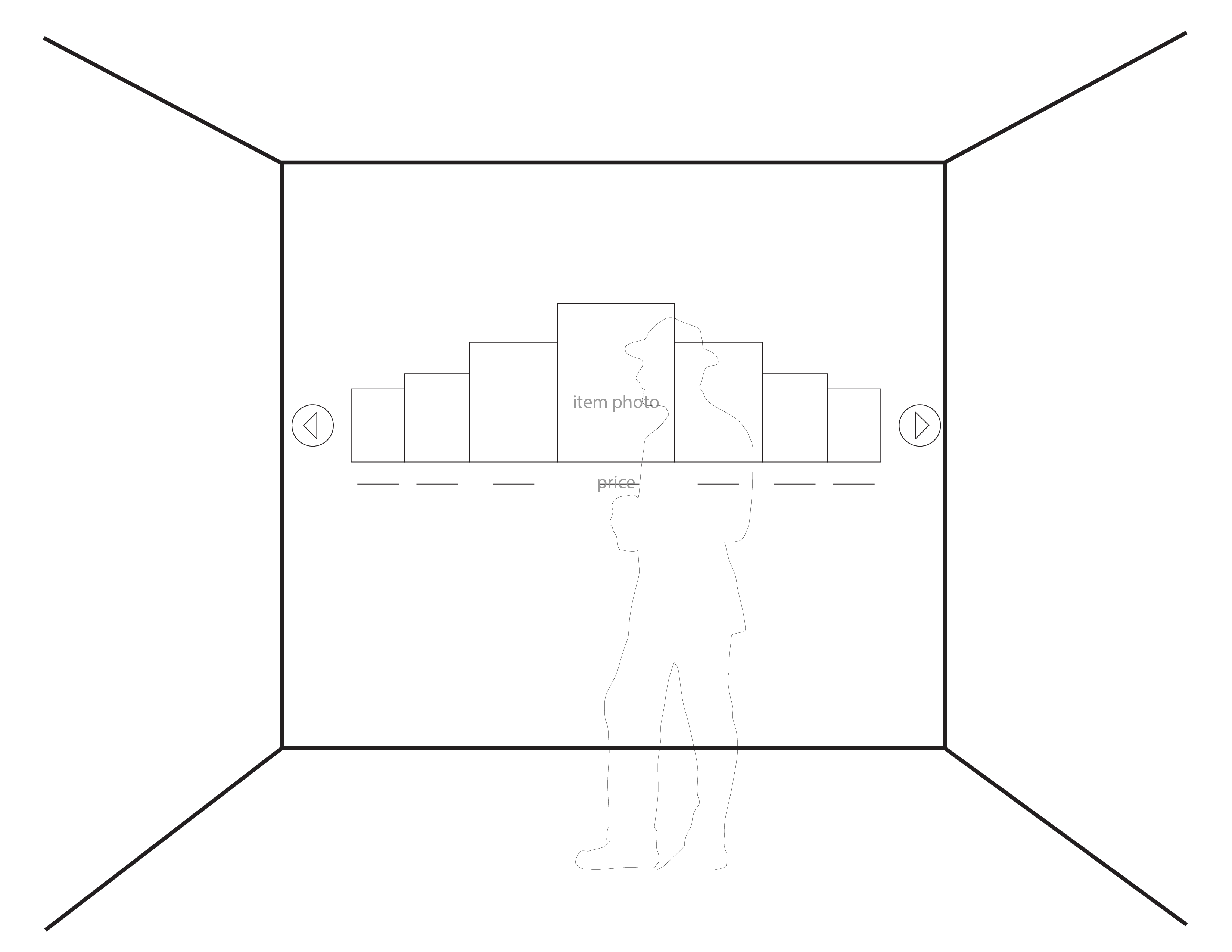

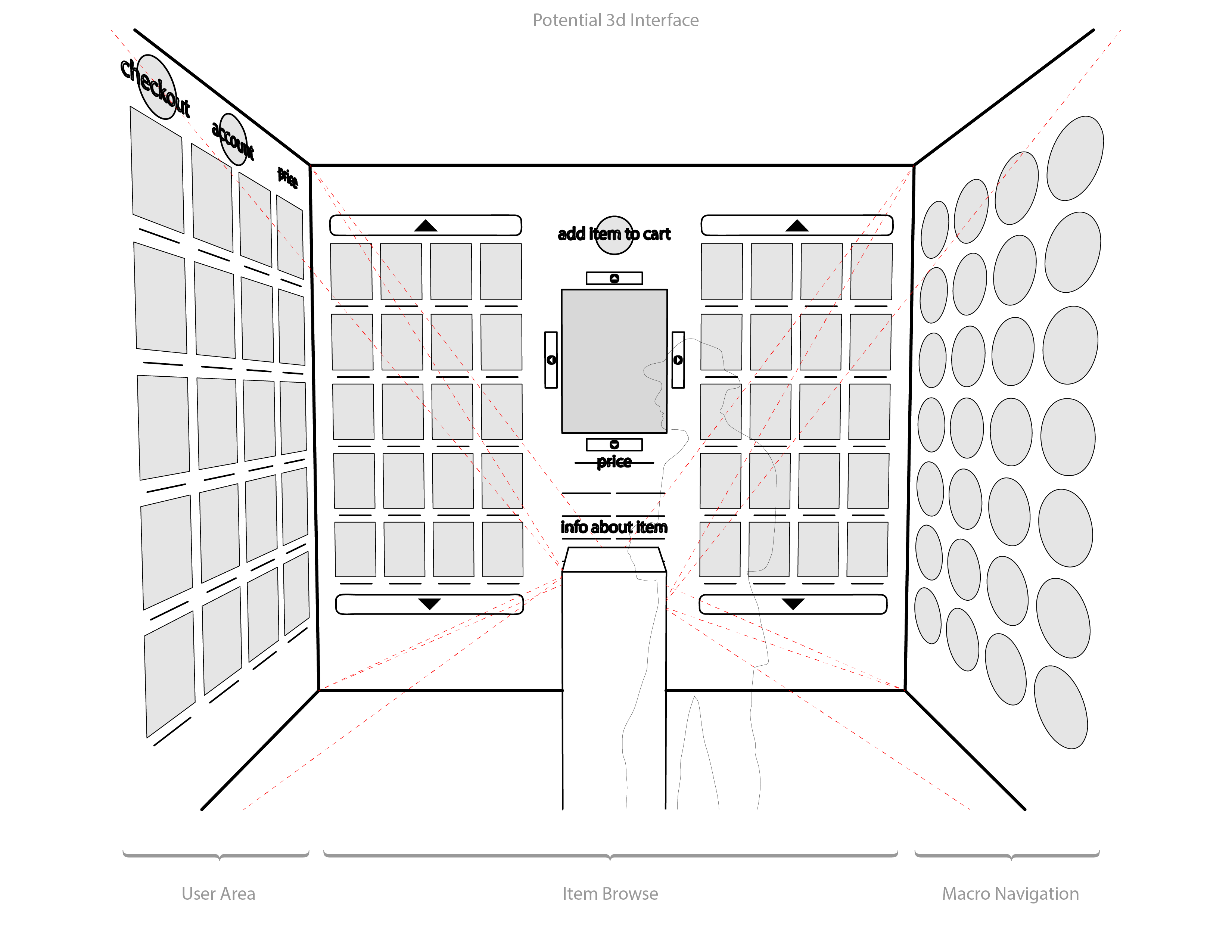

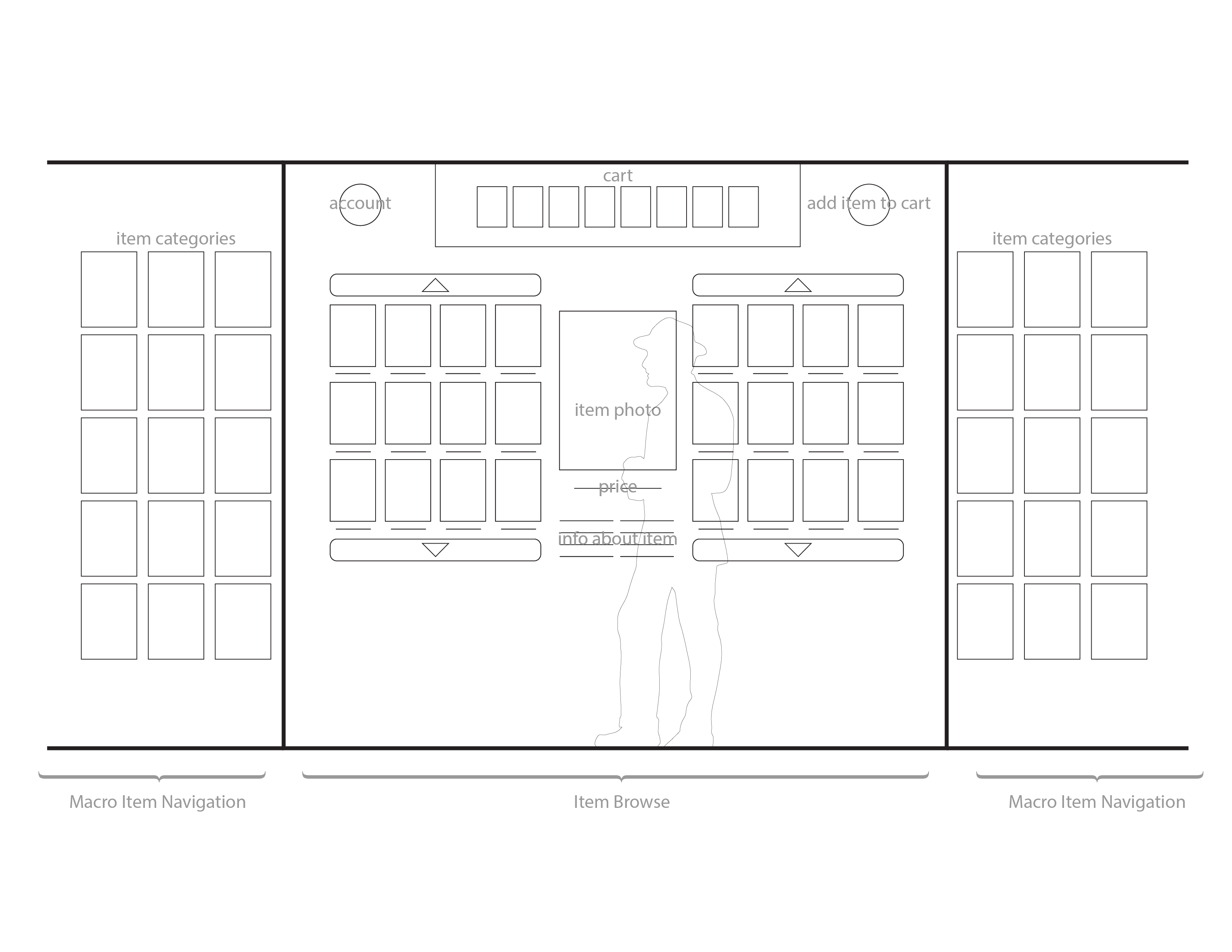

For many UX designers, Google Glass evokes visions of an Iron Man-like interface with numerous controls and augmented reality features. For others, Google Glass's features may seem intrusive or impractical in real-world settings. After using Glass for a week, I began to wonder whether I could change the interface or its gestures to fit more naturally with what I would normally do. What would it mean if our environment could have two-way communication with my device? Here I explore gaze-based interaction as a less intrusive way to interact with the device in public environments. Below are some diagrams illustrating the gestures in relation to large displays:

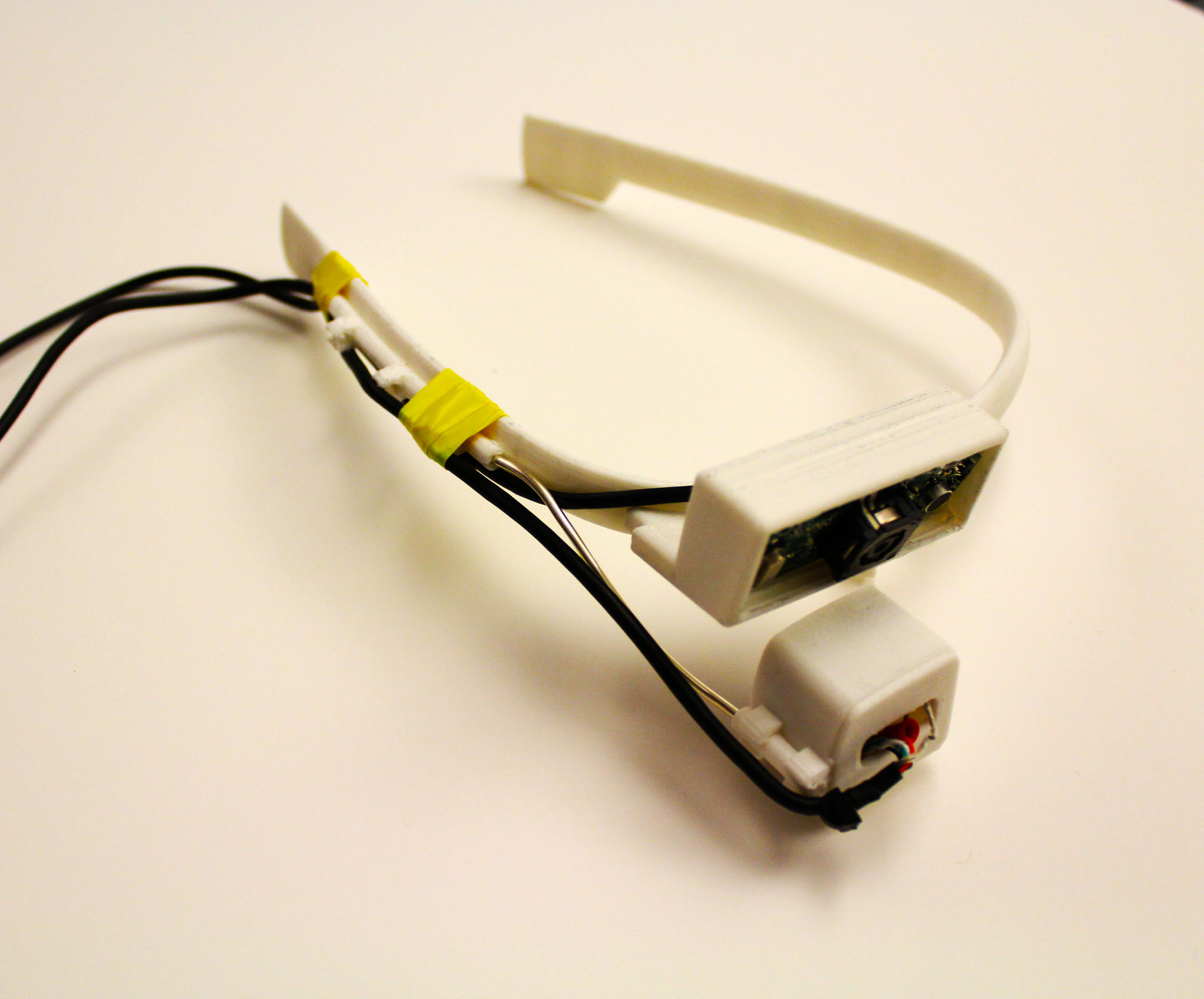

The work explored here was based on the Design and Computation SMArchS thesis Pupil: Constructing the Space of Visual Attention, co-authored by Moritz Philipp Kassner and William Rhodes Patera, who are now part of the company Pupil Labs.

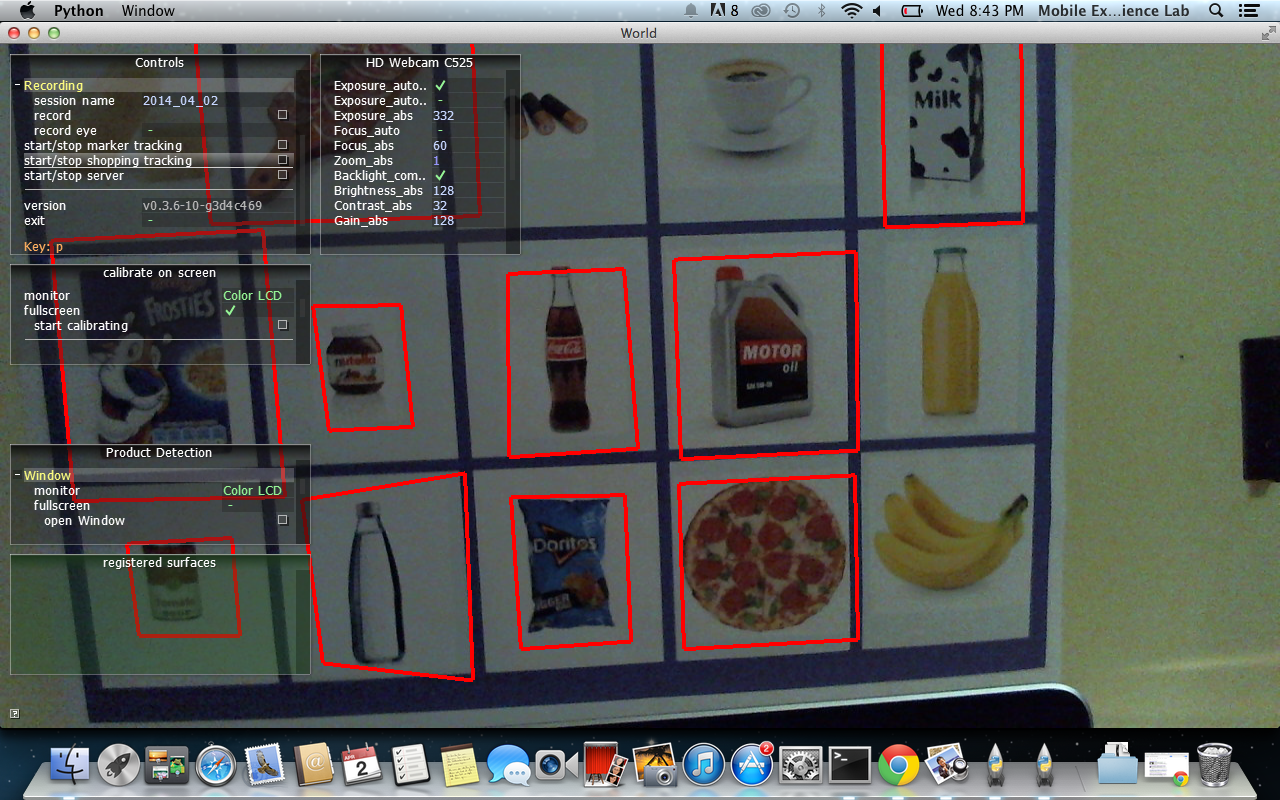

The next step was to begin interacting with our surroundings. We built an application that takes products you look at and adds them to your shopping cart using the gestures shown above.
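
To make the idea concrete, here is a minimal sketch of how gaze could drive an "add to cart" action. It is not the application's actual code. It assumes a dwell gesture (holding your gaze on a product for a set time), which may differ from the gestures in the diagrams, and the names `Region`, `DwellSelector`, and `dwell_seconds` are hypothetical. Gaze samples are assumed to arrive as `(x, y, timestamp)` in the same coordinate space as the product regions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Region:
    """Hypothetical rectangular area that a product occupies in the scene."""
    name: str
    x: float
    y: float
    w: float
    h: float

    def contains(self, gx: float, gy: float) -> bool:
        return self.x <= gx <= self.x + self.w and self.y <= gy <= self.y + self.h


@dataclass
class DwellSelector:
    """Adds a product to the cart once gaze rests on it for dwell_seconds.

    Dwell is an assumed gesture chosen for illustration only.
    """
    regions: List[Region]
    dwell_seconds: float = 1.0
    cart: List[str] = field(default_factory=list)
    _current: Optional[str] = None   # product currently under the gaze
    _since: float = 0.0              # time the gaze entered that product
    _fired: bool = False             # True once this dwell has been counted

    def update(self, gx: float, gy: float, t: float) -> Optional[str]:
        """Feed one gaze sample; return the product name if it was just added."""
        hit = next((r.name for r in self.regions if r.contains(gx, gy)), None)
        if hit != self._current:
            # Gaze moved to a different product (or to empty space): restart timer.
            self._current, self._since, self._fired = hit, t, False
            return None
        if hit is not None and not self._fired and t - self._since >= self.dwell_seconds:
            self._fired = True  # avoid adding the same product repeatedly
            self.cart.append(hit)
            return hit
        return None


if __name__ == "__main__":
    selector = DwellSelector([Region("mug", 0, 0, 100, 100)], dwell_seconds=1.0)
    for sample in [(50, 50, 0.0), (52, 48, 0.5), (51, 49, 1.0), (500, 500, 2.0)]:
        selector.update(*sample)
    print(selector.cart)
```

Requiring the gaze to leave and return before the same product can be added again is one simple way to avoid accidental repeat selections; a real system would also need to handle tracker noise and blinks.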